Long Context Video Benchmark

Exploring the Impact of Video Benchmarks on Contextual Understanding

Benchmarks are central to evaluating and improving AI and machine learning systems. In video understanding, they are the main way to assess what models can actually do, particularly on long videos.

This post looks at why video benchmarks matter, focusing on their impact on contextual understanding. By examining the challenges and advances in the field, it aims to show how benchmarks contribute to more capable and contextually aware AI systems.

The Role of Video Benchmarks

Video benchmarks serve as standardized evaluation tools, allowing researchers and developers to measure the performance of their AI models consistently. These benchmarks are designed to cover a wide range of video understanding tasks, including action recognition, object detection, and scene understanding.

By providing a common ground for comparison, video benchmarks facilitate the progress of AI research. They help identify areas where models excel and areas that require further improvement. This iterative process of evaluation and refinement is essential for pushing the boundaries of video understanding capabilities.

Challenges in Long Video Benchmarking

While video benchmarks have proven valuable, the task of evaluating long videos presents unique challenges. Unlike shorter clips, long videos contain more complex narratives, temporal dynamics, and contextual information. Capturing and interpreting this information accurately is crucial for developing AI systems that can understand and analyze extended video sequences.

One of the primary challenges is the computational demand of processing extensive video data. Longer videos mean more frames, so the cost of feature extraction and temporal modeling grows with duration. Large annotated datasets also become more important, and more costly to produce, because models need labeled examples of long-range events to train effectively.
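To make the scale concrete, here is a minimal sketch of how frame counts grow with duration and how uniform sampling can cap the number of frames a model has to process. The function names, the 30 fps rate, and the budget of 8 frames are illustrative assumptions, not part of any particular benchmark.

```python
def frame_count(duration_s: float, fps: float) -> int:
    """Total number of frames in a video of the given length."""
    return int(duration_s * fps)


def uniform_sample_indices(total_frames: int, num_samples: int) -> list[int]:
    """Pick frame indices spread evenly across the video.

    The video is split into equal-width bins and the midpoint of each
    bin is returned, so the cost is fixed regardless of duration.
    """
    if total_frames <= 0 or num_samples <= 0:
        return []
    num_samples = min(num_samples, total_frames)
    bin_width = total_frames / num_samples
    return [int(bin_width * (i + 0.5)) for i in range(num_samples)]


clip_frames = frame_count(10, 30)        # 10-second clip at an assumed 30 fps
long_frames = frame_count(60 * 60, 30)   # one-hour video at the same rate
print(clip_frames, long_frames)          # 300 108000
print(uniform_sample_indices(long_frames, 8))
```

Uniform sampling keeps compute bounded, but it trades away temporal detail: with 8 frames from an hour of video, consecutive samples are several minutes apart, which is one reason long video evaluation is hard.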

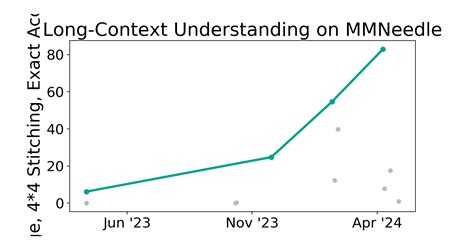

Advancements in Long Video Benchmarks

Despite the challenges, significant advancements have been made in the development of long video benchmarks. Researchers have recognized the importance of evaluating AI models on more realistic and complex video content, leading to the creation of specialized datasets and evaluation protocols.

Dataset Creation

Creating diverse and representative datasets for long video benchmarks is a critical step. These datasets often focus on capturing a wide range of real-world scenarios, including daily activities, sports events, and news broadcasts. By encompassing various contexts and temporal structures, these datasets provide a comprehensive evaluation framework.

For example, ActivityNet, a widely used benchmark for video understanding, contains roughly 20,000 untrimmed videos annotated across 200 activity classes. It has been instrumental in driving research on long video understanding.

Evaluation Metrics

To assess the performance of AI models on long videos accurately, specialized evaluation metrics have been developed. These metrics account for when an event occurs, not only what occurs. For instance, mean Average Precision (mAP) is commonly used for temporal action localization: a predicted segment counts as correct only if its temporal overlap with a ground-truth segment (temporal IoU) reaches a threshold, and the resulting average precision is averaged over classes.
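As one illustration of a temporally aware metric, the sketch below computes temporal IoU between two segments and a simplified average precision for a single class at one IoU threshold. It assumes segments are `(start, end)` pairs in seconds and predictions carry a confidence score; the function names are hypothetical, and the AP here is the plain non-interpolated form, which may differ from the interpolated variant used by a given benchmark's official scorer.

```python
def temporal_iou(a, b):
    """Intersection over union of two (start, end) time segments."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(predictions, ground_truth, tiou_threshold=0.5):
    """Non-interpolated AP for one class.

    predictions: list of (start, end, score)
    ground_truth: list of (start, end)
    Each ground-truth segment can be matched by at most one prediction.
    """
    if not ground_truth:
        return 0.0
    matched = set()
    hits = []
    # Visit predictions from most to least confident.
    for start, end, _score in sorted(predictions, key=lambda p: p[2], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truth):
            if idx in matched:
                continue
            iou = temporal_iou((start, end), gt)
            if iou > best_iou:
                best_iou, best_idx = iou, idx
        if best_idx is not None and best_iou >= tiou_threshold:
            matched.add(best_idx)
            hits.append(1)
        else:
            hits.append(0)
    # Sum precision at each true-positive rank, normalized by ground-truth count.
    ap, true_positives = 0.0, 0
    for rank, hit in enumerate(hits, start=1):
        if hit:
            true_positives += 1
            ap += true_positives / rank
    return ap / len(ground_truth)


ground_truth = [(0.0, 10.0), (20.0, 30.0)]
predictions = [(1.0, 9.0, 0.9), (50.0, 60.0, 0.8), (21.0, 31.0, 0.7)]
print(average_precision(predictions, ground_truth, tiou_threshold=0.5))
```

mAP is then the mean of this value over all classes, and benchmarks often report it averaged over several IoU thresholds as well.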

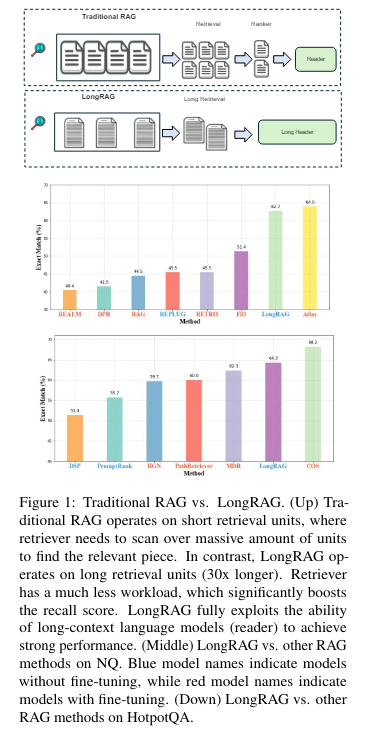

Model Architectures

The development of advanced model architectures has been a key aspect of improving long video understanding. Researchers have explored various approaches, including temporal convolutional networks, recurrent neural networks, and attention-based models, to capture the temporal dependencies and contextual information effectively.

For instance, Temporal Segment Networks (TSN), proposed by Wang et al. in 2016, showed strong results on action recognition and were designed to model long-range temporal structure. TSN divides the video into a fixed number of equal-length segments, samples a short snippet from each, and aggregates the per-snippet predictions into a video-level prediction, capturing both spatial and temporal features while keeping the cost independent of video length.
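The segment-and-aggregate idea can be sketched in a few lines. This is a simplified illustration, not the TSN authors' implementation: the function names are hypothetical, the per-snippet scores are hard-coded stand-ins for the output of a real network, and averaging is assumed as one simple choice of aggregation.

```python
import random


def sample_snippet_indices(num_frames, num_segments, rng=None):
    """Return one frame index per equal-length segment.

    With an rng, the index is drawn at random inside each segment
    (as is typical during training); without one, the segment center
    is used (a deterministic choice suited to evaluation).
    """
    if num_segments <= 0 or num_frames < num_segments:
        raise ValueError("need at least one frame per segment")
    seg_len = num_frames / num_segments
    indices = []
    for k in range(num_segments):
        lo = int(k * seg_len)
        hi = max(lo + 1, int((k + 1) * seg_len))  # exclusive upper bound
        indices.append(rng.randrange(lo, hi) if rng else (lo + hi - 1) // 2)
    return indices


def average_consensus(snippet_scores):
    """Average per-snippet class scores into one video-level score vector."""
    n = len(snippet_scores)
    return [sum(column) / n for column in zip(*snippet_scores)]


# A 90-frame video split into 3 segments, sampled at segment centers.
print(sample_snippet_indices(90, 3))  # [14, 44, 74]

# Placeholder scores for 2 classes from 3 snippets (a real model would produce these).
snippet_scores = [[1.0, 3.0], [2.0, 5.0], [3.0, 4.0]]
print(average_consensus(snippet_scores))  # [2.0, 4.0]
```

Because the number of segments is fixed, the number of frames processed stays the same whether the video lasts ten seconds or an hour; what changes is how sparsely each segment is covered.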

Impact on Contextual Understanding

The advancements in long video benchmarks have had a significant impact on improving contextual understanding in AI systems. By pushing the boundaries of video understanding, these benchmarks have enabled the development of models that can analyze and interpret complex narratives and temporal structures.

Contextual understanding is crucial for applications such as video surveillance, autonomous driving, and content recommendation systems. By training and evaluating models on diverse and challenging long video benchmarks, researchers can improve the models' ability to recognize patterns, anticipate events, and make decisions that depend on context.

Future Directions

While significant progress has been made, there is still room for improvement in long video benchmarks. Some future directions and challenges include:

- Expanding the diversity of datasets to include more real-world scenarios and cultural contexts.

- Addressing the computational challenges associated with processing extensive video data.

- Developing more efficient and accurate evaluation metrics for long video understanding.

- Exploring the potential of transfer learning and pre-training on large-scale datasets to improve model performance.

- Integrating multi-modal approaches, combining visual and textual information, to enhance contextual understanding.

Conclusion

Video benchmarks, particularly for long videos, play a vital role in advancing AI research and development. By providing standardized evaluation tools and diverse datasets, these benchmarks drive the improvement of contextual understanding in AI systems. Continued refinement of benchmarks and model architectures should lead to more capable and contextually aware AI, with implications for many industries and applications.

What are the key challenges in long video benchmarking?

Long video benchmarking faces challenges such as computational demands, the need for large-scale annotated datasets, and capturing complex temporal dynamics and contextual information.

How do video benchmarks contribute to AI development?

Video benchmarks provide a standardized evaluation framework, allowing researchers to compare and improve AI models consistently. They drive the development of more advanced and contextually aware systems.

What are some popular long video benchmarks?

Widely used video benchmarks include ActivityNet, Kinetics, and Something-Something. ActivityNet consists of untrimmed videos, while Kinetics and Something-Something consist of short trimmed clips and are used mainly for action recognition.

How do model architectures impact long video understanding?

Advanced model architectures, such as temporal convolutional networks and attention-based models, have been crucial in capturing temporal dependencies and contextual information in long videos, leading to improved understanding.

What are the future prospects for long video benchmarks?

Future prospects include expanding dataset diversity, addressing computational challenges, developing efficient evaluation metrics, exploring transfer learning, and integrating multi-modal approaches to enhance contextual understanding.