10 Dodge Techniques: A Comprehensive Guide to Efficient Data Processing

Efficient Data Processing: Mastering the Art of Dodge Techniques

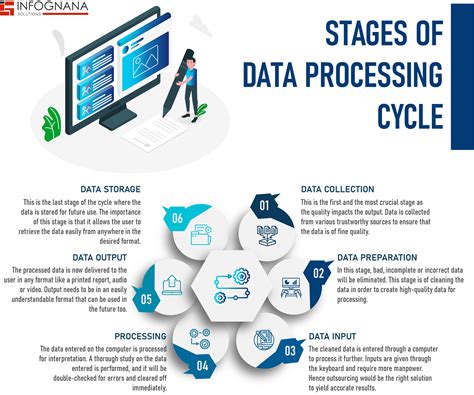

In the world of data analysis and processing, mastering efficient techniques is crucial for extracting valuable insights and making informed decisions. This comprehensive guide will introduce you to ten powerful dodge techniques, each designed to streamline your data processing workflows and enhance your analytical prowess. By adopting these strategies, you’ll be able to navigate complex datasets with ease and unlock the true potential of your data.

Understanding Dodge Techniques

Dodge techniques are essential tools in the data analyst’s toolkit, enabling them to manipulate and present data in a more meaningful and understandable way. These techniques involve clever strategies to handle various data-related challenges, from managing missing values to creating effective visualizations. By implementing dodge techniques, you can ensure that your data analysis process is not only efficient but also accurate and insightful.

Technique 1: Data Cleaning and Preparation

🌟 Note: Data cleaning is a critical step to ensure accurate analysis.

Dealing with Missing Data

- Identify missing values using appropriate functions or tools.

- Decide on a strategy: Impute, remove, or flag missing data based on your analysis goals.

- Imputation methods: Mean or median imputation suits numeric columns (the median is less sensitive to skew and outliers), while mode imputation suits categorical columns.
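As a minimal sketch of the identify, flag, and impute steps above, assuming pandas is the tool in use. The DataFrame and its column names are hypothetical and only serve as an illustration:

```python
import numpy as np
import pandas as pd

# Hypothetical toy data; the column names are illustrative only.
df = pd.DataFrame({
    "age": [25.0, np.nan, 31.0, 40.0],
    "city": ["Oslo", "Lima", None, "Lima"],
})

# 1. Identify: count missing values per column.
missing_counts = df.isna().sum()

# 2. Flag: remember which rows were missing before filling them in.
df["age_was_missing"] = df["age"].isna()

# 3. Impute: median for the numeric column, mode for the categorical one.
df["age"] = df["age"].fillna(df["age"].median())
df["city"] = df["city"].fillna(df["city"].mode().iloc[0])

print(missing_counts)
print(df)
```

Keeping the flag column lets later analysis check whether the imputed rows behave differently from the observed ones.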

Handling Outliers

- Detect outliers using statistical methods or visualizations.

- Consider the context: Decide whether to remove, transform, or analyze outliers separately.

- Transform data: Use techniques like winsorization or log transformation to handle outliers.
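One common way to detect outliers statistically is the interquartile range (IQR) rule. The sketch below assumes pandas and uses made-up values. The 1.5 multiplier is a convention, not a requirement, and capping at the IQR fences is shown here as a simple stand-in for percentile-based winsorization:

```python
import pandas as pd

# Hypothetical measurements; 95 is the obvious outlier.
values = pd.Series([10, 12, 11, 13, 12, 95])

# Detect: anything beyond 1.5 * IQR from the quartiles is flagged.
q1, q3 = values.quantile(0.25), values.quantile(0.75)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
is_outlier = (values < lower) | (values > upper)

# Transform: cap extreme values at the fences instead of dropping them.
capped = values.clip(lower=lower, upper=upper)

print(values[is_outlier])
print(capped)
```

Whether to cap, remove, or analyze the flagged values separately still depends on the context of the data.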

Technique 2: Data Transformation

⚡️ Note: Transformations can reveal hidden patterns and relationships.

Logarithmic Transformation

- Log transformation is useful for highly skewed data.

- It helps in stabilizing variance and improving the normality of the data.

- Apply the transformation using the log function. The data must be strictly positive; for data containing zeros, log(1 + x) is a common alternative.
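A short sketch of a log transformation, assuming NumPy and hypothetical income values. It uses `np.log1p`, which computes log(1 + x) and therefore tolerates zeros, and `np.expm1` to undo it:

```python
import numpy as np

# Hypothetical, highly skewed values (note the zero).
incomes = np.array([0.0, 10.0, 100.0, 1000.0, 100000.0])

# log(1 + x) compresses the long right tail and is defined at zero.
logged = np.log1p(incomes)

# The transformation is reversible, which helps when reporting results.
restored = np.expm1(logged)

print(logged)
print(restored)
```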

Standardization and Normalization

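- Standardization (z-scoring) rescales each feature to mean 0 and standard deviation 1. It does not change the shape of the distribution, so skewed data stays skewed.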

- Normalization scales data to a specific range, often between 0 and 1.

- Formulae: standardization is z = (x - mean) / standard deviation; min-max normalization is x' = (x - min) / (max - min).
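Both rescalings fit in a few lines. This sketch assumes NumPy and a small made-up array. It uses the population standard deviation (NumPy's default); some tools use the sample standard deviation instead:

```python
import numpy as np

x = np.array([2.0, 4.0, 6.0, 8.0])  # hypothetical feature values

# Standardization (z-score): subtract the mean, divide by the standard deviation.
z = (x - x.mean()) / x.std()

# Min-max normalization: rescale linearly so values fall in [0, 1].
x_scaled = (x - x.min()) / (x.max() - x.min())

print(z)
print(x_scaled)
```

In a modeling workflow, the mean, standard deviation, minimum, and maximum would normally be computed on the training data only and then reused for new data.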

Technique 3: Feature Engineering

🤖 Note: Feature engineering can enhance model performance.

Creating New Features

- Extract useful information from existing features.

- Combine features to create new, meaningful variables.

- Consider domain knowledge and data patterns when engineering features.

Handling Categorical Data

- One-hot encoding: Convert categorical variables into binary columns.

- Label encoding: Assign numerical values to categorical data.

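- Handle ordinal and non-ordinal (nominal) data differently: an integer encoding that preserves the order suits ordinal data, while one-hot encoding avoids implying an order for nominal data.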
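The two encodings might look like this in pandas. The columns and the `size_order` mapping are hypothetical, and the mapping is written by hand because only domain knowledge can say which category ranks higher:

```python
import pandas as pd

df = pd.DataFrame({
    "color": ["red", "green", "red"],       # nominal: no natural order
    "size": ["small", "large", "medium"],   # ordinal: small < medium < large
})

# One-hot encoding: one indicator column per category.
one_hot = pd.get_dummies(df["color"], prefix="color")

# Ordinal (label) encoding: integers that preserve the category order.
size_order = {"small": 0, "medium": 1, "large": 2}
df["size_code"] = df["size"].map(size_order)

print(one_hot)
print(df)
```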

Technique 4: Dimensionality Reduction

🔍 Note: Reduce noise and focus on relevant features.

Principal Component Analysis (PCA)

- PCA is a popular technique for reducing dimensionality.

- It builds new variables (principal components) as linear combinations of the original features, ordered by how much of the data's variance each one captures.

- Use the PCA function to extract principal components.
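The guide does not say which PCA function it has in mind, so the sketch below avoids any particular library's PCA API and computes the components directly with NumPy's singular value decomposition. The data is synthetic, with a third feature that is almost a copy of the first:

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: 100 samples, 3 features; feature 3 nearly duplicates feature 1.
base = rng.normal(size=(100, 2))
X = np.column_stack([
    base[:, 0],
    base[:, 1],
    base[:, 0] + 0.01 * rng.normal(size=100),
])

# PCA step 1: center each feature.
X_centered = X - X.mean(axis=0)

# PCA step 2: the rows of Vt are the principal directions.
U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)

# Share of total variance captured by each component.
explained_variance_ratio = S**2 / np.sum(S**2)

# PCA step 3: project onto the first k components.
k = 2
X_reduced = X_centered @ Vt[:k].T

print(explained_variance_ratio)
print(X_reduced.shape)
```

Because one feature is nearly redundant, two components should capture almost all of the variance here. Features on very different scales are usually standardized before this step.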

Feature Selection

- Select the most informative features based on statistical measures.

- Techniques like correlation analysis and information gain can help.

- Consider the trade-off between model complexity and performance.
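A simple correlation-based filter could be sketched as follows, assuming pandas. The dataset is invented, and the 0.5 threshold is an arbitrary choice for illustration rather than a recommended value:

```python
import pandas as pd

# Hypothetical data: one feature related to the target, one unrelated.
df = pd.DataFrame({
    "hours_studied": [1, 2, 3, 4, 5, 6],
    "shoe_size": [40, 38, 43, 39, 42, 38],
    "exam_score": [52, 55, 61, 64, 70, 75],
})

# Absolute Pearson correlation of each feature with the target.
features = df.drop(columns="exam_score")
correlations = features.corrwith(df["exam_score"]).abs()

# Keep features whose correlation clears the (arbitrary) threshold.
selected = correlations[correlations > 0.5].index.tolist()

print(correlations)
print(selected)
```

Correlation only measures linear association, so a feature with a strong nonlinear relationship to the target can still score low.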

Technique 5: Data Visualization

🌈 Note: Visualizations enhance data understanding.

Choosing the Right Plot Type

- Bar charts: Ideal for comparing categorical data.

- Line charts: Perfect for displaying trends over time.

- Scatter plots: Visualize relationships between two continuous variables.

Customizing Visuals

- Adjust plot aesthetics: Colors, labels, and legends.

- Use appropriate scale and axis adjustments.

- Consider adding annotations and tooltips for clarity.

Technique 6: Aggregation and Grouping

📊 Note: Summarize data for efficient analysis.

Aggregation Functions

- Sum, mean, median, and count are common aggregation functions.

- Choose the appropriate function based on your data and analysis goals.

- Use the groupby function to aggregate data by specific variables.
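Assuming the `groupby` mentioned above is the pandas method, several aggregation functions can be applied in one pass. The sales data here is hypothetical:

```python
import pandas as pd

sales = pd.DataFrame({
    "region": ["north", "south", "north", "south", "north"],
    "amount": [100, 80, 120, 60, 140],
})

# One row per region, one column per aggregation function.
summary = sales.groupby("region")["amount"].agg(["sum", "mean", "median", "count"])

print(summary)
```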

Grouping Data

- Group data based on categorical variables.

- Calculate summary statistics or create visualizations for each group.

- Use the pivot_table function for more complex grouping operations.
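For grouping by two variables at once, a pandas `pivot_table` call might look like the sketch below. The data and column names are made up for illustration:

```python
import pandas as pd

sales = pd.DataFrame({
    "region": ["north", "north", "south", "south", "north"],
    "product": ["A", "B", "A", "B", "A"],
    "amount": [100, 50, 80, 60, 140],
})

# Rows are regions, columns are products, cells hold the summed amount.
table = pd.pivot_table(
    sales,
    index="region",
    columns="product",
    values="amount",
    aggfunc="sum",
    fill_value=0,  # show 0 instead of NaN for empty combinations
)

print(table)
```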

Technique 7: Filtering and Subsetting

🔎 Note: Focus on specific data subsets.

Conditional Filtering

- Use boolean expressions to filter data based on specific conditions.

- Apply filters to rows or columns as needed.

- Combine multiple conditions using logical operators.

Subsetting Data

- Extract specific rows or columns from a dataset.

- Use indexing or boolean masks to subset data.

- Consider creating a new dataset with the subset for further analysis.
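The filtering and subsetting steps in this technique can be sketched with pandas boolean masks and `.loc`. The DataFrame is hypothetical:

```python
import pandas as pd

df = pd.DataFrame({
    "name": ["Ana", "Ben", "Cy", "Dee"],
    "age": [23, 35, 41, 29],
    "dept": ["ops", "eng", "eng", "ops"],
})

# Conditional filtering: combine conditions with & (and), | (or), ~ (not).
# Each condition needs its own parentheses.
mask = (df["age"] > 25) & (df["dept"] == "eng")
older_engineers = df[mask]

# Subsetting: pick rows by condition and columns by name in one step.
# .copy() creates an independent dataset for further analysis.
ops_subset = df.loc[df["dept"] == "ops", ["name", "age"]].copy()

print(older_engineers)
print(ops_subset)
```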

Technique 8: Merging and Joining Data

🔗 Note: Combine datasets for comprehensive analysis.

Merging Datasets

- Merge datasets based on common keys or indices.

- Use the merge function for different types of merges.

- Handle missing values and duplicate entries during the merge process.

Joining Datasets

- Join datasets based on specific columns or keys.

- Use left, right, or inner joins as needed.

- Ensure data consistency and integrity during the joining process.
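Assuming the merge function above refers to pandas `merge`, the sketch below contrasts an inner and a left join on two invented tables. The `indicator=True` option adds a `_merge` column that makes unmatched rows easy to spot:

```python
import pandas as pd

customers = pd.DataFrame({
    "customer_id": [1, 2, 3],
    "name": ["Ana", "Ben", "Cy"],
})
orders = pd.DataFrame({
    "customer_id": [1, 1, 3, 4],
    "total": [20.0, 35.0, 15.0, 50.0],
})

# Inner join: only customers that have at least one order.
inner = customers.merge(orders, on="customer_id", how="inner")

# Left join: every customer; missing order data becomes NaN.
left = customers.merge(orders, on="customer_id", how="left", indicator=True)

# Rows that found no match in the orders table.
unmatched = left[left["_merge"] == "left_only"]

print(inner)
print(left)
print(unmatched)
```

Comparing row counts before and after a merge is a quick consistency check: customer 1 appears twice because it has two orders, and order 4 drops out of both results because it has no matching customer.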

Technique 9: Resampling and Bootstrapping

🎲 Note: Estimate variability and confidence intervals.

Resampling Techniques

- Bootstrapping: Create multiple samples with replacement from the original data.

- Cross-validation: Repeatedly divide data into training and validation sets and evaluate the model on each split.

- Stratified sampling: Ensure representation of specific groups in the sample.

Bootstrap Confidence Intervals

- Calculate confidence intervals using bootstrapped samples.

- Assess the variability and reliability of your estimates.

- Use appropriate statistical methods to interpret the results.
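One way to compute a bootstrap confidence interval is the percentile method, sketched here with only the Python standard library. The function name `bootstrap_ci`, its defaults, and the sample values are all hypothetical. Other interval methods exist and may be preferable for small or skewed samples:

```python
import random
import statistics

def bootstrap_ci(data, n_resamples=2000, confidence=0.95, seed=0):
    """Percentile bootstrap confidence interval for the mean (illustrative)."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    n = len(data)
    # Resample with replacement, recording the mean of each resample.
    means = sorted(
        statistics.fmean(rng.choices(data, k=n)) for _ in range(n_resamples)
    )
    # Take the matching percentiles of the sorted bootstrap means.
    alpha = (1 - confidence) / 2
    lower = means[int(alpha * n_resamples)]
    upper = means[int((1 - alpha) * n_resamples) - 1]
    return lower, upper

sample = [4.1, 5.3, 4.8, 6.0, 5.5, 4.9, 5.1, 5.7]  # hypothetical measurements
low, high = bootstrap_ci(sample)
print(f"mean = {statistics.fmean(sample):.3f}, 95% CI = ({low:.3f}, {high:.3f})")
```

A wide interval signals that the estimate is unstable and that more data may be needed before drawing conclusions.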

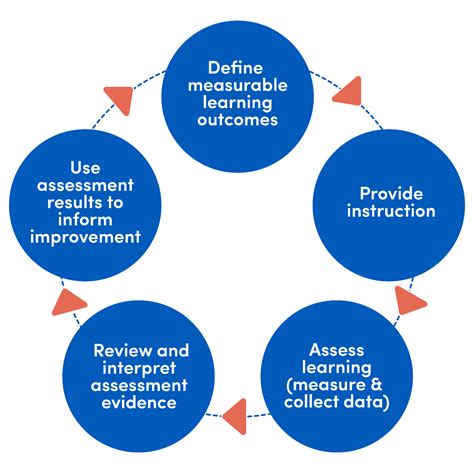

Technique 10: Model Evaluation and Validation

🧪 Note: Ensure model reliability and accuracy.

Evaluation Metrics

- Accuracy, precision, recall, and F1-score are common evaluation metrics.

- Choose metrics based on your model's purpose and the nature of the problem.

- Consider domain-specific metrics for specialized applications.
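To make the four metrics concrete, here is a dependency-free sketch for binary classification. The function name `classification_metrics` and the labels are hypothetical. Returning 0.0 when a denominator is zero is one convention among several:

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for binary labels (illustrative)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    correct = sum(t == p for t, p in pairs)

    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical ground truth and predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Precision matters most when false positives are costly, recall when false negatives are costly. Accuracy alone can mislead on imbalanced classes.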

Cross-Validation

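- K-fold cross-validation: Split the data into K folds; train on K-1 folds and validate on the remaining one, rotating until each fold has served once as the validation set.

- Shuffle the data before splitting so each fold is a random sample, unless the rows are time-ordered and the order must be preserved.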

- Calculate average performance metrics for a more robust evaluation.
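The K-fold procedure can be sketched without any machine learning library. In the example below, `k_fold_indices` is a hypothetical helper, the target values are invented, and the "model" is only a baseline that predicts the training mean, standing in for whatever model is actually being evaluated:

```python
import random
import statistics

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)       # shuffle once, reproducibly
    folds = [indices[i::k] for i in range(k)]  # k roughly equal folds
    for i, validation in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation

# Hypothetical target values.
y = [3.0, 5.0, 4.0, 6.0, 5.5, 4.5, 7.0, 3.5, 5.0, 6.5]

fold_errors = []
for train_idx, val_idx in k_fold_indices(len(y), k=5):
    # "Train": the baseline model just memorizes the training mean.
    prediction = statistics.fmean(y[i] for i in train_idx)
    # "Validate": mean absolute error on the held-out fold.
    mae = statistics.fmean(abs(y[i] - prediction) for i in val_idx)
    fold_errors.append(mae)

# Averaging across folds gives a more stable estimate than a single split.
average_mae = statistics.fmean(fold_errors)
print(fold_errors)
print(average_mae)
```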

Conclusion

In this comprehensive guide, we’ve explored ten essential dodge techniques for efficient data processing. By mastering these techniques, you’ll be equipped to handle a wide range of data-related challenges and produce accurate, insightful analyses. Remember, efficient data processing is a continuous learning process, so stay curious, experiment with different techniques, and always strive for excellence in your data-driven endeavors.

What are some common challenges in data cleaning and preparation?

Data cleaning challenges include handling missing values, dealing with outliers, and ensuring data consistency. These issues can impact the accuracy of your analysis, so proper handling is crucial.

How can I choose the right visualization for my data?

Consider the nature of your data and the insights you want to convey. Bar charts are great for comparisons, line charts for trends, and scatter plots for relationships. Choose a visualization that best represents your data story.

What is the purpose of feature engineering in data analysis?

Feature engineering involves creating new features from existing ones to improve model performance. It allows you to capture complex relationships and patterns in your data, leading to more accurate predictions and insights.

Why is data visualization important in data analysis?

Data visualization is crucial as it helps in understanding complex data patterns and relationships. Visual representations make it easier to identify trends, outliers, and correlations, leading to more informed decision-making.

How can I ensure the reliability of my data analysis results?

To ensure reliability, focus on proper data cleaning, transformation, and feature engineering. Additionally, use robust evaluation metrics and cross-validation techniques to assess the performance and generalizability of your models.